Are you tired of being held back by persistent pain? This guide walks through practical strategies for managing it: mindfulness techniques, nutrition and supplements, physical therapy and exercise, alternative therapies, and a strong support network. Some are long-established and others are newer, but all aim to help you reduce discomfort, improve your well-being, and live life on your terms. Let’s dive in.

Exploring the Power of Mindfulness Techniques for Pain Relief

Mindfulness techniques can help with pain relief by changing how you relate to pain. By practicing mindfulness, you develop a deeper awareness of your body’s sensations and learn to respond to pain more deliberately rather than reacting to it. This mental focus can shift your perspective, which may reduce the perceived intensity of pain and promote relaxation. Exercises such as deep breathing, progressive muscle relaxation, and body scanning are easy to build into a daily routine and can improve your ability to manage pain over time. Don’t let pain hold you back: a few minutes of regular mindfulness practice can make a real difference in how you manage it.

The Role of Nutrition and Supplements in Effective Pain Management

Nutrition and supplements play a supporting role in pain management because they supply the building blocks for a healthy body and mind. A well-balanced, nutrient-dense diet built around whole, unprocessed foods rich in antioxidants and anti-inflammatory compounds can help reduce inflammation, support healing, and improve pain tolerance. Supplements such as omega-3 fatty acids, vitamin D, magnesium, and curcumin may further support your body’s ability to manage pain. Prioritizing what you eat and which supplements you take can lead to a meaningful improvement in your pain levels and a healthier lifestyle overall.

Uncovering the Benefits of Physical Therapy and Exercise for Alleviating Discomfort

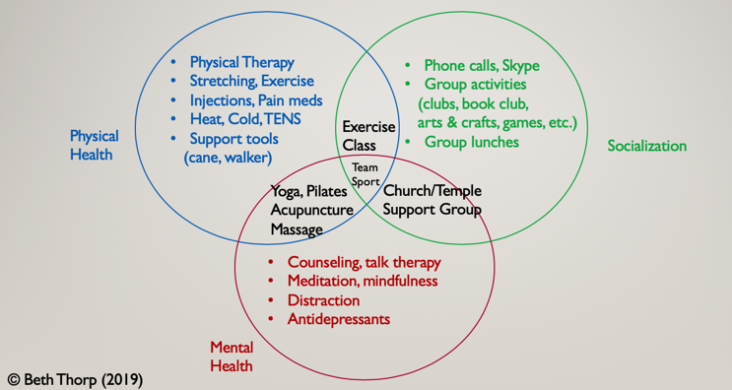

Physical therapy and exercise offer real advantages for alleviating discomfort and managing pain. By following a tailored exercise routine and working with a skilled physical therapist, you can target specific areas of pain and improve your overall physical well-being. A customized program strengthens your muscles and enhances flexibility, and exercise also boosts endorphin levels, which naturally reduces pain. Physical therapy can also help correct posture, decrease inflammation, and promote healing. Consistent, mindful practice is what delivers long-lasting relief, so make movement a regular part of your routine.

Harnessing the Potential of Alternative Therapies for Pain Management Success

Alternative therapies can make your pain management plan more effective. Options such as acupuncture, massage, chiropractic care, and herbal remedies give you ways to manage pain without relying solely on conventional medications. These holistic approaches aim to address the underlying cause of pain while also promoting overall well-being and reducing stress, which makes them a useful addition to your plan. Research the methods that best suit your situation and integrate them into your routine to ease discomfort and enhance your quality of life.

Establishing a Support Network and Coping Strategies for Long-Term Pain Management

Establishing a strong support network is crucial for effective long-term pain management. Surround yourself with understanding friends, family, and healthcare professionals who can provide encouragement, advice, and assistance as you manage your pain. Online forums, support groups, and social media communities can connect you with others who share similar experiences and can offer valuable insights. In addition, develop coping strategies such as deep breathing, meditation, and visualization to help alleviate pain and reduce stress. By combining a solid support system with practical coping strategies, you’ll be better equipped to manage your pain and maintain a positive outlook on life.